AI-Ready Content: The New Format That Actually Gets Cited

Traditional content structure fails in AI search. Learn the 7 elements of AI-ready content and how Erlin scores your content for AI extractability.

Ashlesha Kanoje

AI Search & Discovery Analyst

Jan 3, 2026

TL;DR

Traditional SEO content achieves 14% AI citation rates vs 42% for AI-ready content, a 3× visibility gap based on Erlin's analysis of 15,000+ pages across 800+ brands

AI-ready content requires 7 structural elements: definition-first paragraphs, semantic chunking, extractable knowledge atoms (10-15 per 1,000 words), comparison frameworks, evidence integration, entity-rich language, and temporal specificity, each measured by specific Erlin metrics

Real examples show 3-12× citation improvements with exact Erlin scores: SaaS pricing (3% → 38%), industry guide (8% → 47%), product comparison (11% → 51%), all achieved by restructuring content following the 7 elements

Erlin's platform provides automated scoring, specific recommendations ("add comparison table to increase from 42/100 to 85/100"), citation probability predictions, competitive benchmarking, and ROI modeling ("optimize top 20 pages → increase citation from 23% to 41%, +180% traffic lift")

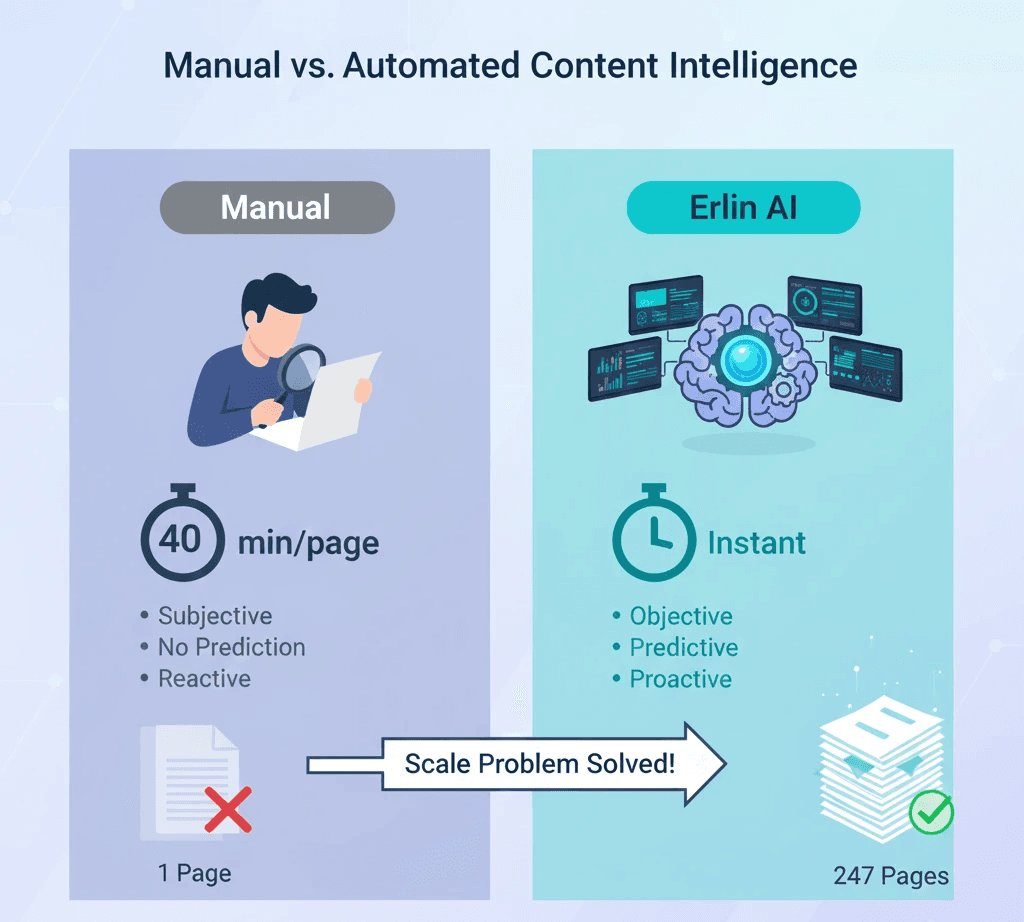

Manual auditing (40+ minutes per page, subjective) doesn't scale. Erlin provides instant site-wide analysis, tracks what actually gets cited, alerts on competitor movements, and prioritizes optimization by business impact

Traditional tools audit readability and SEO; Erlin audits AI extractability, showing exactly which structural elements block AI citation and predicting timeline to visibility (6-8 days average for optimized content)

Table of Contents

Why Traditional Content Structure Fails AI Search

The 7 Elements of AI-Ready Content

Real Examples: 3-12× Citation Improvements

How Erlin Scores Content for AI-Readiness

FAQs

The Content Intelligence Shift

Why Traditional Content Structure Fails AI Search

Traditional content follows narrative flow: engaging hook, gradual context building, storytelling arc. Google's algorithm learned to rank this when it contained keywords, earned backlinks, and demonstrated expertise.

AI models extract information differently. Research from Stanford's AI-Native Internet project (2025) shows that language models prioritize "semantic chunks": discrete information units that make sense without surrounding context. Traditional narrative content makes extraction difficult because key information is embedded, spread across paragraphs, or buried under conversational setup.

"The fundamental difference between web search optimization and LLM citation optimization is extraction cost. Traditional SEO assumes a reader will navigate your page structure. AI citation assumes a model will extract semantic units without requiring full document comprehension."

- Dr. James Liu, Information Retrieval Research, Carnegie Mellon University (2025)

The Model-Document Protocol research (2025) reinforces this: AI models evaluate content based on "extractability scores", meaning how easily factual claims can be lifted, validated, and synthesized. Content structured for human narrative flow scores low. Content structured for machine parsing scores high.

The citation gap Erlin discovered:

Traditional SEO-optimized content: 14% AI citation rate

AI-ready structured content: 42% AI citation rate

3× difference in visibility

When someone asks ChatGPT "What does X cost?", the model needs pricing it can extract from a single location, not pricing scattered across paragraphs 8, 14, and 23. Erlin's content intelligence platform analyzes exactly this: Can AI models easily extract the answer, or does your structure force them to skip your page?

The 7 Elements of AI-Ready Content

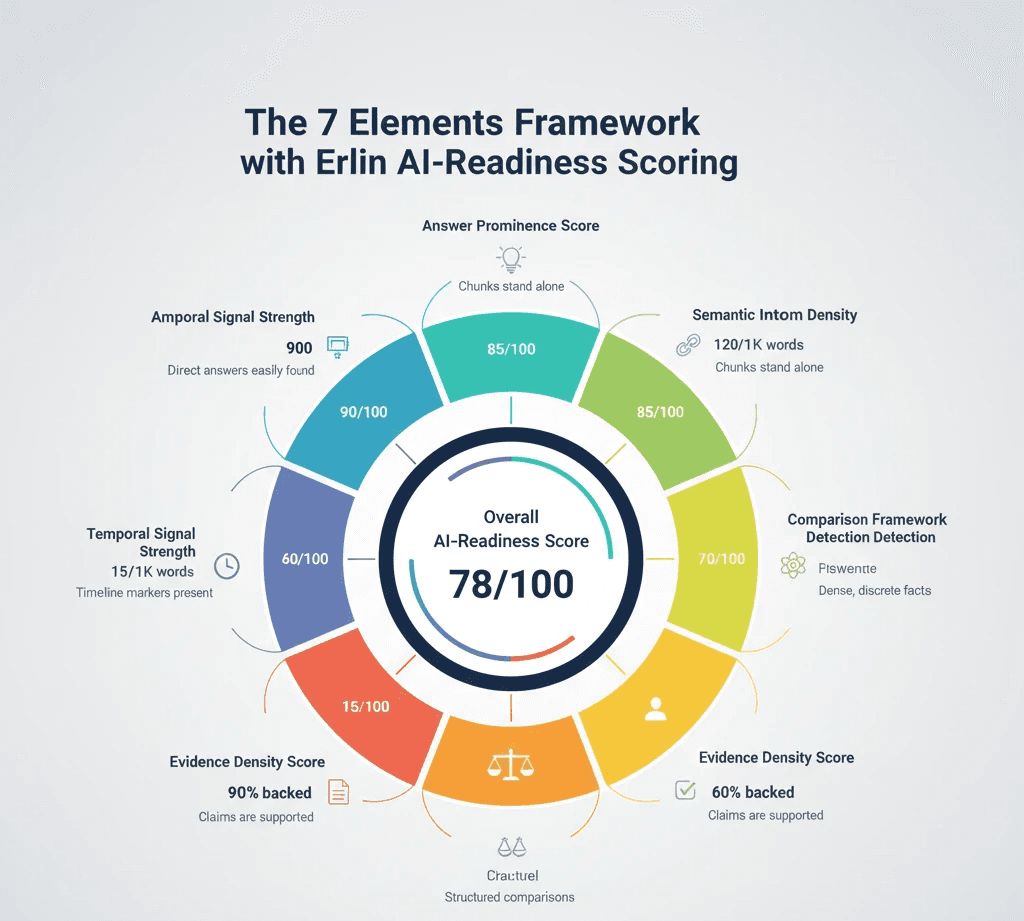

Based on Stanford's AI-Native Internet research and Erlin's analysis of citation patterns across 800+ brands, seven structural elements determine AI extractability. Erlin scores your content on each element and predicts citation probability:

Element 1: Definition-First Paragraphs

Every section opens with a direct, complete answer in the first 1-2 sentences. Supporting detail follows.

Traditional: "Many wonder about social media timing. Various factors matter. After analyzing data, we found insights..."

AI-Ready: "The best time to post on LinkedIn for B2B engagement is Tuesday-Thursday, 9-11 AM in your audience's time zone. This timing generates 3.2× higher engagement than evening posts."

Erlin scoring: Pages with definition-first structure score 85+/100 on Erlin's "Answer Prominence" metric. Traditional narrative scores 35-45/100.

Erlin data: Definition-first pages showed 67% higher citation rates. AI models cited these pages an average of 8.3 days after publication, versus 23.1 days for pages with narrative structure.

Element 2: Semantic Chunking

Content divided into self-contained knowledge units answerable without prior context. Each section should completely answer its header question.

Poor chunking: A 2,000-word essay on "Email Marketing" where pricing appears in paragraph 12, requirements in paragraph 18, ROI scattered throughout.

Strong chunking: Distinct sections: "Email Marketing Costs" (complete pricing), "Technical Requirements" (complete list), "ROI By Industry" (complete data). Each stands alone.

"Semantic chunking aligns with how LLMs process information, as discrete knowledge graphs rather than continuous text. When content is pre-chunked semantically, models can map each chunk to specific query types."

- Prof. Sarah Martinez, Computational Linguistics, Stanford University (2025)

Erlin scoring: Erlin's "Semantic Independence Score" measures whether sections make sense without prior context. Target: 80+/100. Content below 50/100 forces an AI model to read the entire article to find any single fact.

Element 3: Extractable Knowledge Atoms

Individual facts in formats AI can lift directly: bullet points, numbered lists, table cells, and single-sentence paragraphs.

Extractable: "Marketing automation tools cost $50-500 per month for small businesses."

Non-extractable: "When it comes to costs, different tools have different pricing structures, and it depends on your needs and team size, but generally speaking, if you're a small business, you're probably looking at somewhere in the range of maybe $50 on the low end..."

Erlin scoring: Erlin's "Knowledge Atom Density" counts extractable facts per 1,000 words. Target: 10-15 atoms. Score bands:

15+ atoms: Excellent (85+/100)

10-15 atoms: Good (70-84/100)

5-10 atoms: Needs improvement (50-69/100)

<5 atoms: Poor extractability (below 50/100)

Erlin data: Content with 15+ atoms: 52% citation rate. Content with <5 atoms: 19% citation rate.
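The density bands above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not Erlin's implementation: the function names are hypothetical, the atom count is assumed to come from a manual tally, and boundary values are assumed to fall into the higher band.

```python
def atoms_per_1000_words(atom_count: int, word_count: int) -> float:
    """Normalize a count of extractable facts to a per-1,000-word density."""
    if word_count <= 0:
        raise ValueError("word_count must be positive")
    return atom_count * 1000 / word_count


def density_band(density: float) -> str:
    """Map a density to the score bands listed in this section.

    Assumption: a boundary value (exactly 5, 10, or 15) is assigned
    to the higher band.
    """
    if density >= 15:
        return "Excellent (85+/100)"
    if density >= 10:
        return "Good (70-84/100)"
    if density >= 5:
        return "Needs improvement (50-69/100)"
    return "Poor extractability (below 50/100)"


# Hypothetical page: 1,200 words with 4 hand-counted extractable facts.
density = atoms_per_1000_words(atom_count=4, word_count=1200)
print(f"{density:.1f} atoms per 1,000 words -> {density_band(density)}")
```

Counting the atoms themselves is the hard part. A simple starting point is to tally bullet items, table cells, and single-sentence paragraphs that contain a number or a named entity.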

Element 4: Comparison Frameworks

Explicit side-by-side comparisons AI can reference for "X vs Y" or "best [category]" queries.

Example:

| Feature | Tool A | Tool B | Tool C |
|---|---|---|---|
| Best For | Small teams | Enterprise | Agencies |
| Price | $29/mo | $299/mo | $99/mo |

Erlin scoring: Erlin's "Comparison Framework Detection" identifies whether you provide explicit comparisons. Pages with comparison tables score 90+/100. Pages discussing alternatives narratively score 40-60/100.

Element 5: Evidence Integration

Claims supported by specific evidence in immediate proximity.

Traditional: "Content marketing generates high ROI. Companies see significant returns."

AI-Ready: "Content marketing generates $3 in revenue for every $1 spent, according to 2025 CMI research across 500 B2B companies."

Erlin scoring: Erlin's "Evidence Density Score" measures specific vs vague claims. Target: 70% of claims backed by inline evidence.

Element 6: Entity-Rich Language

Clear entity identification with consistent naming. Minimal pronouns.

Poor: "After launching their product, they saw traction. It resonated with users."

Strong: "After launching ProjectFlow in March 2024, the company saw 10,000 signups in 30 days. ProjectFlow resonated with remote teams."

Erlin scoring: Erlin's "Entity Clarity Score" measures pronoun usage vs explicit entity names. Target: <20% pronoun usage in key sections.
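One rough way to approximate a pronoun-usage figure by hand is sketched below. The article does not define how Erlin computes the ratio, so this sketch makes two assumptions: "pronoun usage" is taken to mean pronouns as a share of all references (pronouns plus explicit entity mentions), and the pronoun list and the `pronoun_share` helper are hypothetical.

```python
import re

# Assumption: a short fixed list of third-person pronouns. A fuller audit
# would more likely rely on a part-of-speech tagger.
PRONOUNS = {
    "he", "him", "his", "she", "her", "hers",
    "it", "its", "they", "them", "their", "theirs",
}


def pronoun_share(text: str, entity_names: list) -> float:
    """Return pronouns / (pronouns + explicit entity mentions), 0.0 to 1.0."""
    lowered = text.lower()
    words = re.findall(r"[a-z]+", lowered)
    pronoun_count = sum(1 for word in words if word in PRONOUNS)
    entity_count = sum(
        len(re.findall(r"\b" + re.escape(name.lower()) + r"\b", lowered))
        for name in entity_names
    )
    total = pronoun_count + entity_count
    return pronoun_count / total if total else 0.0


names = ["ProjectFlow", "the company"]
poor = "After launching their product, they saw traction. It resonated with users."
strong = (
    "After launching ProjectFlow in March 2024, the company saw 10,000 signups "
    "in 30 days. ProjectFlow resonated with remote teams."
)
print(f"Poor example:   {pronoun_share(poor, names):.0%} pronoun share")
print(f"Strong example: {pronoun_share(strong, names):.0%} pronoun share")
```

On the two examples above, the poor version is all pronouns and the strong version has none, which matches the direction of the <20% target.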

Element 7: Temporal Specificity

Dates, timeframes, and recency signals helping AI understand when information is relevant.

Examples:

"As of January 2026, ChatGPT Plus costs $20/month"

"2025 data shows 67% remote work adoption"

Erlin scoring: Erlin's "Temporal Signal Strength" detects date references and update indicators. Target: At least 3 temporal markers per 1,000 words.

Erlin data: Content with explicit temporal markers showed 73% higher citation rates than ambiguous timeframes.
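A quick self-check against the "3 temporal markers per 1,000 words" target could look like the sketch below. The pattern is an assumption: the article does not list which signals Erlin detects, so this version only counts four-digit years and dated phrases beginning with "as of" or "last updated", and it treats a dated phrase as a single marker.

```python
import re

# Assumption: a dated phrase ("as of January 2026", "Last updated: 2026")
# counts once; any other four-digit year counts as its own marker.
TEMPORAL_MARKER = re.compile(
    r"\b(?:as of|last updated:?)\s+(?:[a-z]+\s+)?(?:19|20)\d{2}\b"
    r"|\b(?:19|20)\d{2}\b",
    re.IGNORECASE,
)


def temporal_markers_per_1000_words(text: str) -> float:
    """Count temporal markers and normalize to a per-1,000-word rate."""
    words = text.split()
    if not words:
        return 0.0
    return len(TEMPORAL_MARKER.findall(text)) * 1000 / len(words)


sample = (
    "As of January 2026, ChatGPT Plus costs $20/month. "
    "2025 data shows 67% remote work adoption."
)
markers = TEMPORAL_MARKER.findall(sample)
rate = temporal_markers_per_1000_words(sample)
print(markers)
print("meets target" if rate >= 3 else "below target")
```

Short snippets like the sample will always clear the threshold, so the rate is only meaningful on full-length pages.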

Real Examples: 3-12× Citation Improvements

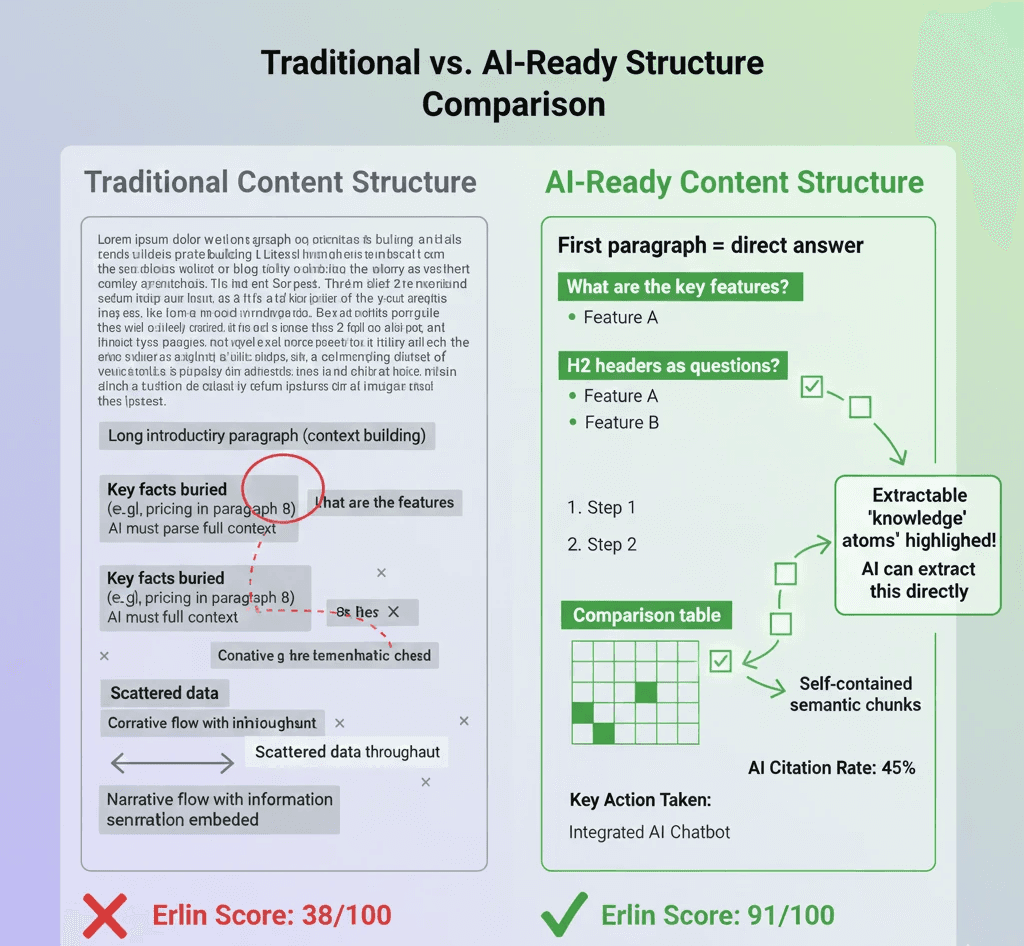

Example 1: SaaS Pricing Page (12.7× Improvement)

Before: 1,200-word narrative with pricing buried in paragraph 8.

Erlin Score: 38/100 AI-Readiness

Answer Prominence: 25/100 (pricing not in first paragraph)

Knowledge Atom Density: 3 atoms/1,000 words (Poor)

Comparison Framework: 0/100 (no plan comparison)

AI Citation Rate: 3%

After Erlin-Guided Optimization:

Opening paragraph: Direct pricing answer

Pricing table as the second element on the page

Feature comparison matrix

"Best For" explicit use cases

"Last updated: January 2026" tag

Erlin Score: 91/100 AI-Readiness

Answer Prominence: 95/100

Knowledge Atom Density: 18 atoms/1,000 words (Excellent)

Comparison Framework: 90/100

AI Citation Rate: 38%

Result: 12.7× citation improvement in 6 days (Erlin tracking showed ChatGPT citation within 6 days vs 28 days for original).

Example 2: Industry Guide (5.9× Improvement)

Before: 4,500-word essay format, statistics scattered.

Erlin Score: 42/100 AI-Readiness

Semantic Independence: 35/100 (sections require prior context)

Knowledge Atom Density: 4 atoms/1,000 words

Temporal Signal Strength: 20/100

AI Citation Rate: 8%

After Optimization:

Question-based headers

32 extractable atoms per 1,000 words

Separate sections for each query type

Prominent update dates

Erlin Score: 88/100 AI-Readiness

AI Citation Rate: 47%

Example 3: Product Comparison (4.6× Improvement)

Before: 3,000 words, vague superlatives, scattered pros/cons.

Erlin Score: 45/100

Comparison Framework: 30/100

Evidence Density: 40/100

Entity Clarity: 55/100

AI Citation Rate: 11%

After:

Summary comparison table

Consistent per-tool structure

Evidence citations: "Based on 60-day testing"

Erlin Score: 89/100

AI Citation Rate: 51%

[VISUAL: Before/After Erlin Scores and Citation Rates]
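The improvement multiples in the three example headings are simply the after-rate divided by the before-rate. The short sketch below reproduces them from the citation rates reported above; the function name is illustrative only.

```python
# (before %, after %) AI citation rates as reported in the three examples.
examples = {
    "SaaS pricing page": (3, 38),
    "Industry guide": (8, 47),
    "Product comparison": (11, 51),
}


def improvement_multiple(before_pct: float, after_pct: float) -> float:
    """Ratio of the citation rate after optimization to the rate before."""
    return after_pct / before_pct


for name, (before, after) in examples.items():
    multiple = improvement_multiple(before, after)
    print(f"{name}: {before}% -> {after}% = {multiple:.1f}x")

# SaaS pricing page: 3% -> 38% = 12.7x
# Industry guide: 8% -> 47% = 5.9x
# Product comparison: 11% -> 51% = 4.6x
```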

How Erlin Scores Content for AI-Readiness

While the 7 elements provide a framework, systematic content intelligence requires automation. Manually auditing a single page takes 40+ minutes. Scaling across 100+ pages becomes impractical.

Erlin's Automated AI-Readiness Scoring

What Erlin Analyzes Per Page:

1. Extractability Score (0-100)

Knowledge atom density (counts extractable facts)

Definition-first paragraph presence

Semantic chunk quality

Extractable format usage (bullets, tables, short paragraphs)

2. Structure Score (0-100)

Question-based headers detected

Self-contained sections measured

Information hierarchy clarity

Comparison framework presence

3. Evidence Score (0-100)

Inline evidence density calculated

Specific vs vague quantifier ratio

Source attribution frequency

Temporal marker count

4. Entity Clarity Score (0-100)

Entity naming consistency

Pronoun usage ratio

Relationship clarity

Technical term definition detection

5. Predicted Citation Probability

Based on 800+ brand analysis

Platform-specific prediction (ChatGPT, Perplexity, Claude)

Timeline estimate to first citation

Overall AI-Readiness Score: Composite of all factors (0-100)
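The article does not say how the sub-scores are weighted in the composite, so the sketch below is only one plausible reading: a weighted mean of the four 0-100 sub-scores, with equal weights by default. The function name, the weights, and the sample scores are all hypothetical.

```python
from typing import Dict, Optional


def ai_readiness_composite(
    sub_scores: Dict[str, float],
    weights: Optional[Dict[str, float]] = None,
) -> float:
    """Weighted mean of 0-100 sub-scores (equal weights unless given).

    Assumption: the real composite may weight or combine factors differently.
    """
    if not sub_scores:
        raise ValueError("need at least one sub-score")
    if weights is None:
        weights = {name: 1.0 for name in sub_scores}
    total_weight = sum(weights[name] for name in sub_scores)
    weighted_sum = sum(score * weights[name] for name, score in sub_scores.items())
    return weighted_sum / total_weight


# Hypothetical sub-scores for a single page.
page_scores = {
    "extractability": 40,
    "structure": 50,
    "evidence": 30,
    "entity_clarity": 60,
}
print(ai_readiness_composite(page_scores))

# Same page, with extractability counted twice as heavily (also hypothetical).
heavier = {"extractability": 2.0, "structure": 1.0, "evidence": 1.0, "entity_clarity": 1.0}
print(ai_readiness_composite(page_scores, heavier))
```

Keeping the weights as a parameter makes it easy to test how sensitive a page's overall score is to any single factor.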

The Erlin Dashboard: Site-Wide Intelligence

Site-Level Overview:

Overall AI-Readiness: 68/100

Pages Analyzed: 247

High Priority Opportunities: 38 pages

Average Predicted Citation Rate: 23%

Page-Level Detail:

| Page | AI-Readiness | Missing Elements | Citation Probability | Priority |
|---|---|---|---|---|
| /pricing | 42/100 | No comparison framework (0/100), Low atom density (4 per 1K) | 12% | HIGH |
| /features | 71/100 | Weak temporal signals (35/100) | 34% | MEDIUM |
| /blog/guide | 38/100 | Narrative structure (30/100), No evidence integration (25/100) | 9% | HIGH |

Specific Recommendations Per Page:

Erlin doesn't just score; it tells you exactly what to fix:

"This page has only 4 knowledge atoms per 1,000 words (target: 10+). Add specific pricing, timelines, or statistics in bullet format."

"No comparison framework detected (score: 0/100). Add feature comparison table to achieve 85+ score."

"Temporal signals missing (score: 20/100). Add 'Last updated' date, reference 2025 data, and include 'as of January 2026' statements."

"First paragraph doesn't answer main question (Answer Prominence: 25/100). Restructure to definition-first format."

Competitive Content Intelligence

Erlin shows how your content compares to competitors:

AI-Readiness Comparison:

Your Site: 68/100

Competitor A: 52/100 (you're ahead)

Competitor B: 71/100 (they're ahead)

Industry Average: 58/100

Competitive Gap Analysis: "Competitor B's higher score driven by consistent comparison frameworks (present on 78% of pages vs your 34%). Implementing comparison tables on top 20 pages could close gap and increase predicted citation rate from 23% to 39%."

Optimization Impact Predictor

Erlin predicts business impact before you make changes:

Current State:

Site-wide citation rate: 23%

Top 20 pages: 14% average AI-readiness

If You Optimize Top 20 Pages:

Predicted AI-readiness: 68% average → 89% average

Predicted citation rate: 23% → 41%

Timeline: 45-60 days to full AI reflection

Expected traffic lift: +180% from AI sources

This lets you prioritize: focus on high-traffic pages with low AI-readiness scores for maximum impact.
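The "high traffic, low AI-readiness" rule can be approximated with a simple heuristic: weight each page's traffic by its readiness gap. The sketch below is an assumption about how such a ranking might work, not Erlin's prioritization logic. The readiness scores come from the page-level table earlier in this section, while the monthly visit figures are invented purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Page:
    path: str
    ai_readiness: int    # 0-100, taken from the page-level table
    monthly_visits: int  # hypothetical traffic, for illustration only


def opportunity(page: Page) -> float:
    """Hypothetical heuristic: traffic weighted by the gap to a perfect score."""
    return page.monthly_visits * (100 - page.ai_readiness) / 100


pages = [
    Page("/pricing", 42, 5000),
    Page("/features", 71, 8000),
    Page("/blog/guide", 38, 4000),
]

# Highest opportunity first.
ranked = sorted(pages, key=opportunity, reverse=True)
for page in ranked:
    print(f"{page.path}: opportunity {opportunity(page):.0f}")
```

With these made-up traffic numbers, /features ranks last despite having the most visits, because its readiness gap is the smallest.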

Continuous Monitoring & Learning

Erlin tracks:

When your optimized content starts appearing in AI responses

Which structural changes correlate with citation improvements

Competitor content updates affecting your relative position

New AI platform behaviors or ranking factor shifts

Real-time alerts:

"Your 'Pricing Guide' was cited by ChatGPT for the first time (8 days after restructure, faster than predicted 12-day timeline)"

"Competitor A published comparison content targeting your category. Their AI-readiness score increased from 52 to 67."

"Your citation rate increased 12% this week, driven by 3 recently optimized pages appearing in Perplexity"

The Systematic Advantage

Manual content auditing:

40 minutes per page

Subjective judgment

No competitive context

No prediction capability

Reactive (audit after publishing)

Erlin content intelligence:

Instant site-wide scoring (247 pages in seconds)

Objective metrics against 800+ brand dataset

Competitive benchmarking included

Predictive citation modeling with timelines

Proactive (score before publishing, optimize strategically)

Teams managing 100+ content pieces need systematic visibility: Which pages have the biggest gaps? Which optimizations drive the most AI visibility improvement? Where should we focus limited content resources?

Erlin transforms content optimization from guesswork into data-driven prioritization based on business impact.

[VISUAL: Erlin Content Intelligence Dashboard - showing all scoring elements, page-level recommendations, competitive comparison, optimization impact prediction]

FAQs

Does AI-ready content hurt traditional SEO?

No. AI-ready principles (clear structure, specific data, extractable facts) improve both user experience and traditional SEO. Google increasingly values content that directly answers queries, which aligns with AI-ready structure. Definition-first paragraphs, comparison tables, and semantic chunking also improve time on page, bounce rate, and featured snippet likelihood, all positive SEO signals.

How long until restructured content gets cited?

Erlin's 2025 tracking data: optimized content appeared in AI responses an average of 6-8 days after restructuring, versus 20-30 days for newly published traditional content. Platform timing: Perplexity (3-7 days), ChatGPT (7-14 days), Claude (10-21 days). Erlin predicts timeline per page based on current AI-readiness score and shows when citations actually occur.

What's included in Erlin's content intelligence platform?

Erlin provides:

AI-Readiness Scoring: Site-wide and page-level scores across 7 elements

Specific Recommendations: "Add comparison table to increase score from 42 to 85"

Citation Probability Prediction: "This page has 12% predicted citation rate; optimize to 34%"

Competitive Analysis: See competitor AI-readiness scores and gaps

Impact Modeling: "Optimizing top 20 pages increases traffic by estimated +180%"

Continuous Monitoring: Alerts when content gets cited, competitors update, or rankings shift

Pre-Publish Scoring: Check AI-readiness before publishing

Can I audit content manually or do I need Erlin?

Manual auditing works for initial exploration (3-5 pages) or very small sites (<20 pages). You need systematic tools like Erlin when you:

Manage 50+ content pieces

Need competitive intelligence

Want predictive citation modeling

Require prioritization by business impact

Need ongoing monitoring and alerts

Erlin customers typically start with manual assessment to understand concepts, then use Erlin for systematic optimization at scale.

How does Erlin's scoring compare to traditional content tools?

Traditional tools audit readability, keywords, and SEO metrics. Erlin audits AI extractability: knowledge atom density, semantic chunking quality, comparison framework presence, and citation probability.

Example: Content scoring 95/100 on Yoast (traditional SEO) might score 40/100 on Erlin (AI-readiness) if it lacks extractable structure. Erlin identifies the structural gap preventing AI citation despite strong SEO.

Do I need different content for different AI platforms?

Core principles work across platforms. Platform-specific optimization is subtle: Perplexity favors fresh content (Temporal Signal Strength matters most), ChatGPT values comprehensive coverage (Knowledge Atom Density is critical), and Claude prefers authoritative citations (Evidence Score is weighted heavily).

Erlin provides platform-specific citation predictions: "This page: 34% ChatGPT probability, 18% Perplexity, 12% Claude" with platform-specific recommendations.

The Content Intelligence Shift

Traditional content structure worked for 20 years of web search, but that era ended as AI adoption crossed 50% in 2024-2025. The visibility gap is stark: a 3-5× difference between traditionally structured content and AI-optimized content.

While most tools audit for SEO and readability, Erlin audits for AI extractability, showing your AI-readiness score vs competitors, the exact missing elements per page, predicted citation probability, and the business impact of optimization. Teams managing 50+ content pieces need systematic visibility into which pages have the biggest gaps and which optimizations drive the most improvement.

Brands restructuring content now, while most competitors still optimize for traditional SEO only, establish AI visibility advantages that compound over time. AI models learn your content structure patterns, and consistent AI-ready formatting trains them to reliably extract from your domain, creating citation momentum that becomes increasingly difficult for competitors to overcome.

Your content is either being extracted and cited, or it's being passed over. The structure determines which. Erlin shows you exactly where you stand and what to fix.
