
ChatGPT vs Perplexity vs Claude vs Gemini: The Citation Wars

How ChatGPT, Perplexity, Claude, and Gemini cite brands differently. Analysis of 680M citations reveals why single-platform strategies fail and integrated approaches win.

Sid Tiwatnee

Founder

Jan 8, 2026

TL;DR

Programmatic SEO can generate 10,000 pages overnight. But in 2026, volume without AI visibility is a recipe for disaster.

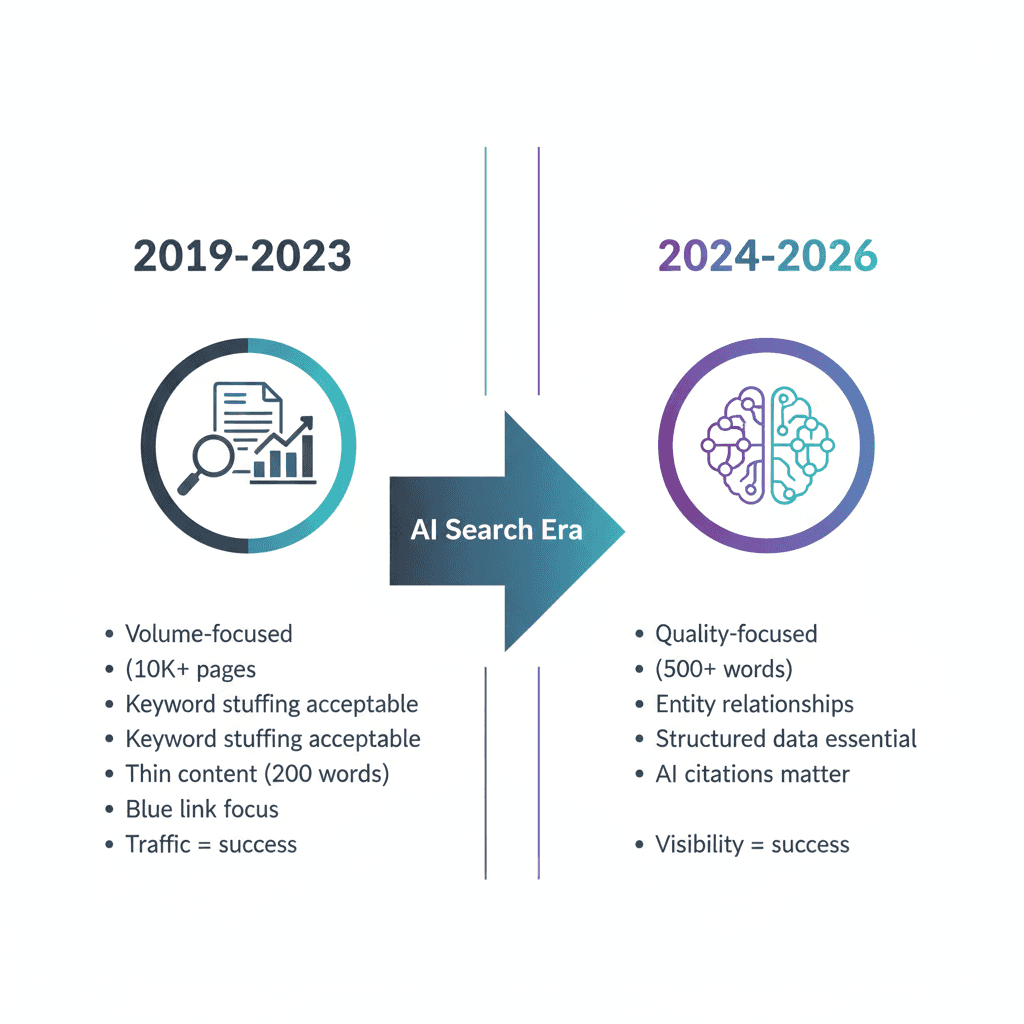

The Shift: Traditional programmatic SEO optimized for rankings and traffic. The 2026 reality requires optimizing for AI citations, zero-click visibility, and entity authority, while still maintaining scale.

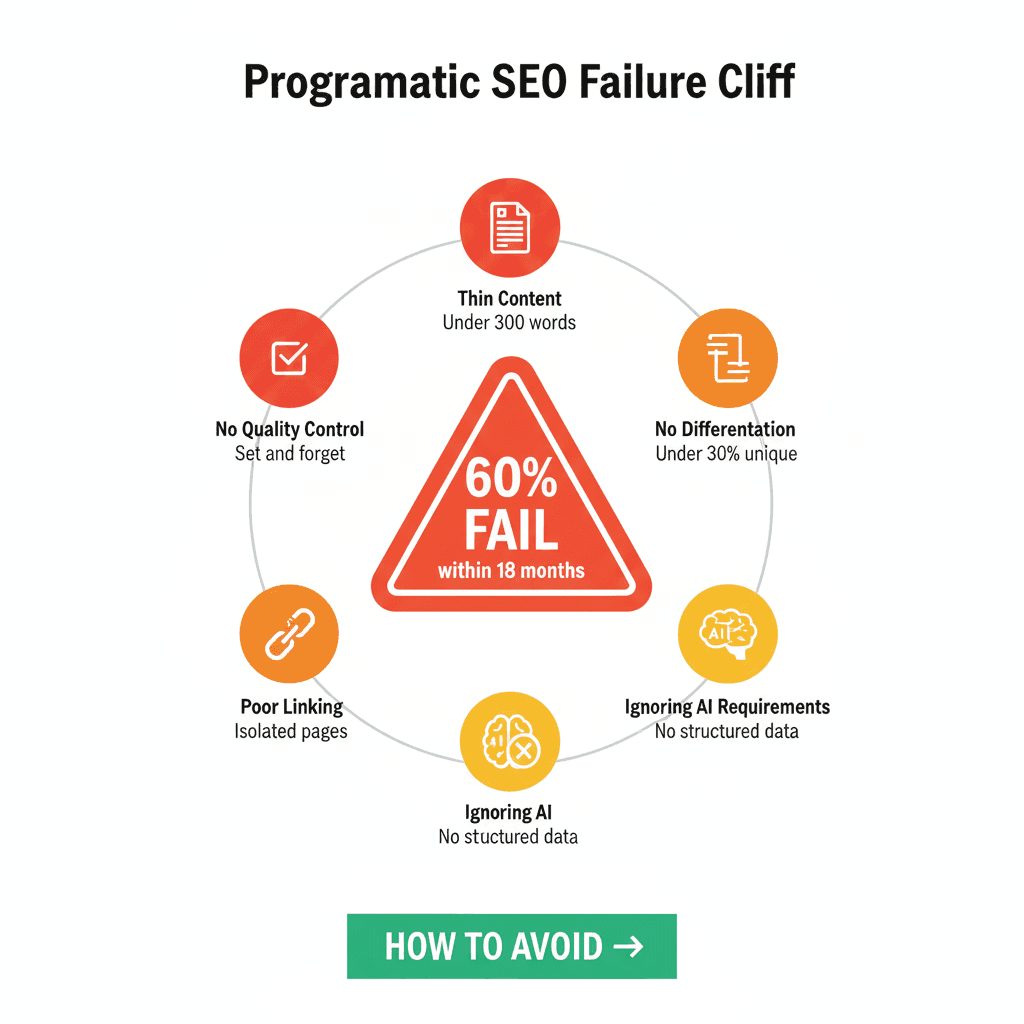

The Risk: 60% of programmatic implementations fail within 18 months. The cause? Creating pages that rank in Google but get ignored by ChatGPT, Perplexity, and AI Overviews.

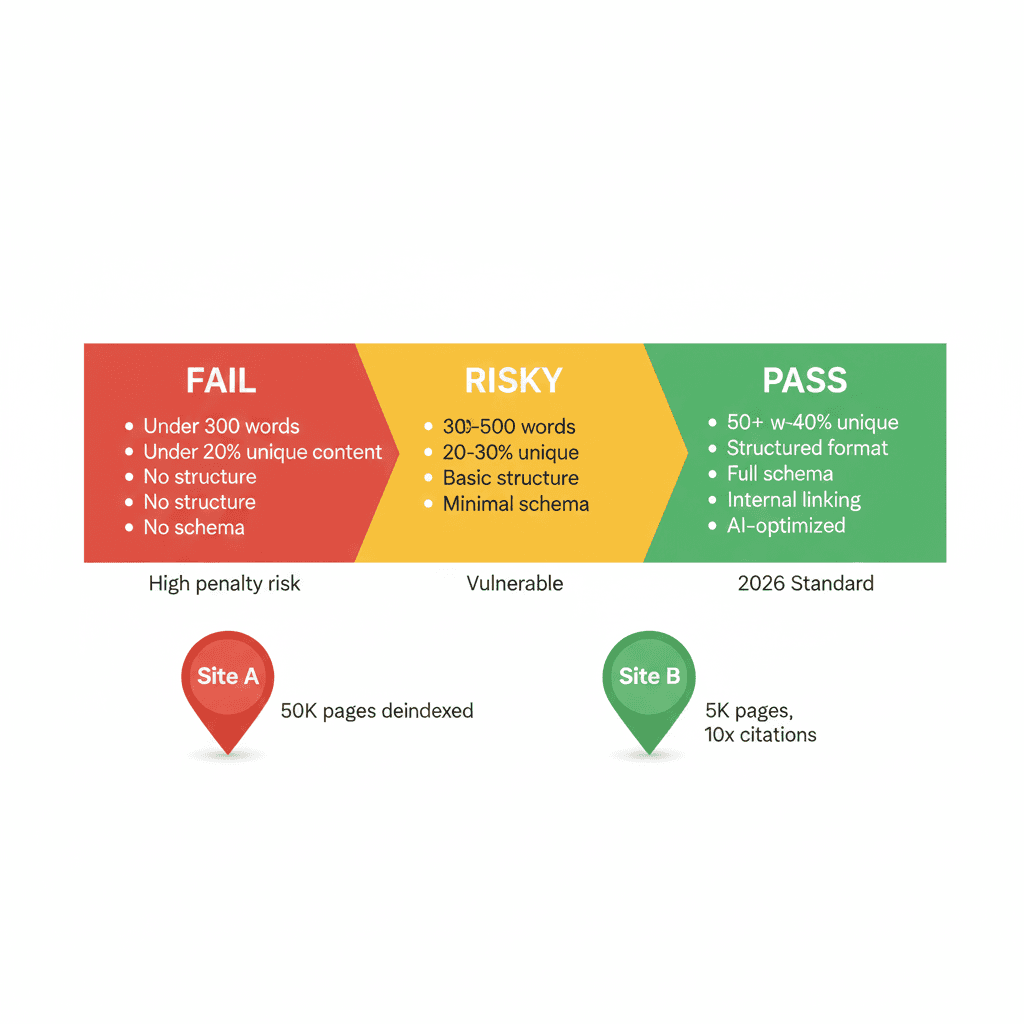

Quality Threshold: Every programmatic page now needs 500+ unique words minimum and 30-40% differentiation from the template, not just keyword swaps.

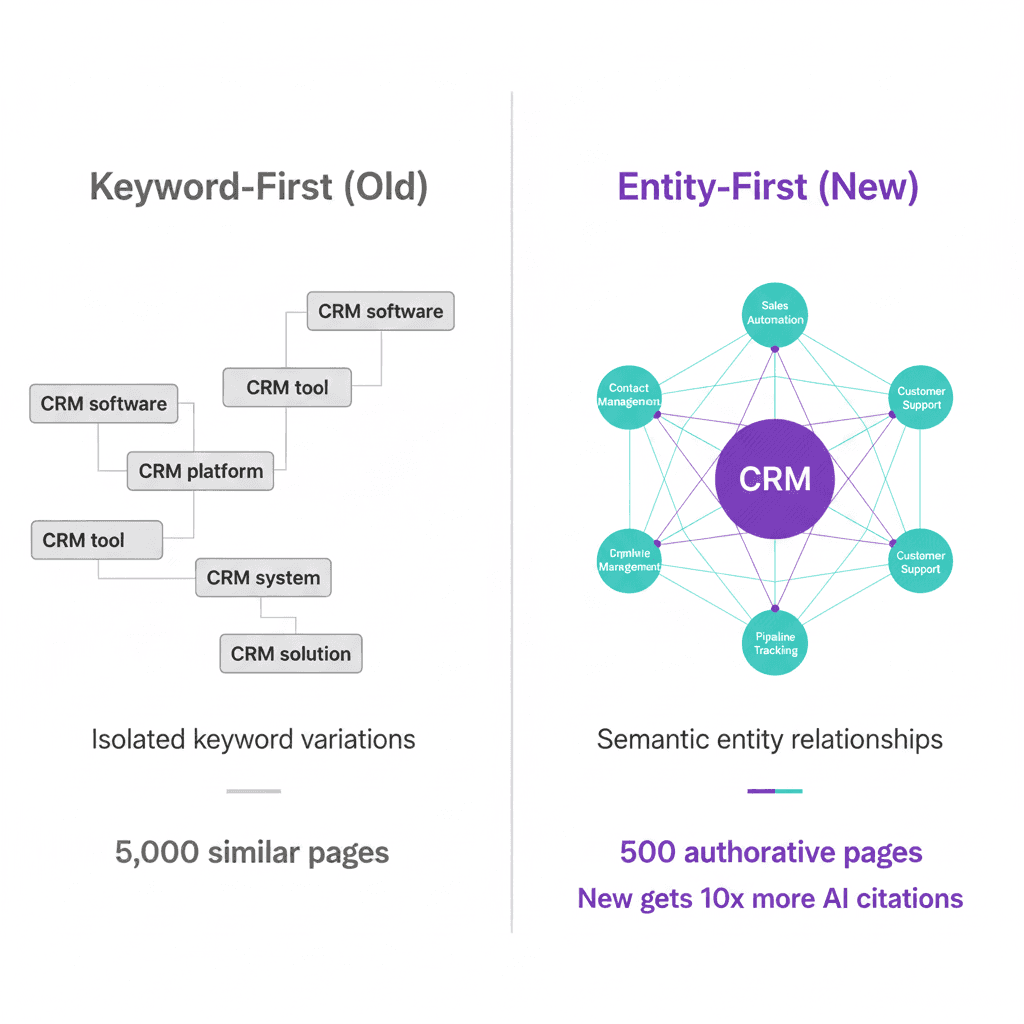

Entity-First Approach: AI systems reward semantic relationships over keyword lists. Build entity networks that show how concepts connect, not isolated keyword variations.

Dual Optimization: Traditional rankings still matter, but you also need structured content with Schema markup, FAQs, and comparative formats that AI systems can confidently cite.

New Metrics to Track: Beyond rankings and traffic, monitor citation rates, grounding events, and visibility in ChatGPT, Perplexity, and AI Overviews.

The Opportunity: Research shows 40.58% of AI citations come from Google's top 10 results. Programmatic pages that rank well and satisfy AI quality standards can dominate both ecosystems.

Bottom Line: The programmatic SEO playbook has changed. Teams that adapt to AI search requirements now will build sustainable competitive advantages. Those that don't risk building ghost towns of uncited content.

Table of Contents

What is Programmatic SEO?

How the AI Search Era Changed Programmatic SEO

The 60% Failure Rate: Why Most Programmatic SEO Fails

New Rules for Programmatic SEO in 2026

Entity-First Architecture (vs Keyword-First)

Quality Thresholds That Satisfy Both Google and AI

Content Types That Scale and Get Cited

Technical Implementation for Dual Visibility

Common Mistakes and How to Avoid Them

How Erlin Tracks Programmatic Performance Across Both Ecosystems

Getting Started: Your First 100 Pages

FAQ

What is Programmatic SEO?

Programmatic SEO is the systematic creation of content at scale using templates, structured data, and automation to target thousands, sometimes millions, of related search queries.

Instead of manually creating individual pages, you identify patterns in search behavior, create a template structure, populate it with data, and generate hundreds or thousands of variations. Each variation targets different long-tail keywords while using the same core template.

How It Works

The traditional process follows several key steps:

Identify patterns in search behavior (e.g., "[service] in [city]" or "how to connect [tool A] to [tool B]")

Create a template structure that can accommodate variations while maintaining quality

Gather structured data from databases, APIs, or other sources

Populate templates with data to generate variations at scale

Target specific long-tail keywords systematically across your niche
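The five steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline. The template, the field names (`service`, `city`, `provider_count`, `local_note`), and the sample rows are all hypothetical stand-ins for your own data source.

```python
from string import Template

# Hypothetical template for the "[service] in [city]" pattern.
PAGE_TEMPLATE = Template(
    "<h1>$service in $city</h1>\n"
    "<p>Listed providers in $city: $provider_count. $local_note</p>"
)


def slugify(*parts):
    """Build a URL slug like 'plumbing-in-austin' from the given parts."""
    return "-".join(parts).lower().replace(" ", "-")


def generate_pages(rows):
    """Populate the template once per structured-data row; returns {slug: html}."""
    pages = {}
    for row in rows:
        slug = slugify(row["service"], "in", row["city"])
        # substitute() raises KeyError if a row is missing a field,
        # which is safer than silently publishing a half-filled page.
        pages[slug] = PAGE_TEMPLATE.substitute(row)
    return pages


# Made-up sample rows standing in for a database or API export.
rows = [
    {"service": "Plumbing", "city": "Austin", "provider_count": 42,
     "local_note": "Sample page-specific note for Austin."},
    {"service": "Plumbing", "city": "Denver", "provider_count": 37,
     "local_note": "Sample page-specific note for Denver."},
]
pages = generate_pages(rows)
print(sorted(pages))  # ['plumbing-in-austin', 'plumbing-in-denver']
```

Using `substitute()` rather than `safe_substitute()` is deliberate. A row with missing data fails loudly instead of producing a page with a literal `$local_note` in it.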

Classic Examples

Several major platforms have used programmatic SEO successfully:

TripAdvisor: Created "Things to do in [City]" pages for virtually every city globally without hiring writers for every location.

Zillow: Generates property listings for every address, automatically created from real estate data.

Zapier: Built integration pages for every app combination with formats like "Connect [App A] to [App B]."

Yelp: Created business directory pages for every city and category combination, scaling to millions of pages.

The Traditional Value Proposition

Programmatic SEO offers scale that manual content creation cannot match; you can create 10,000 pages in the time it takes to write 10 manually. It provides comprehensive coverage by targeting every long-tail variation in your niche. The efficiency comes from leveraging data you already have rather than creating everything from scratch. The competitive advantage emerges from dominating search results through comprehensive topic and keyword coverage.

When Programmatic SEO Makes Sense

Programmatic SEO works best when you have:

Structured data available (locations, products, integrations, comparisons)

Search queries that follow predictable patterns

1,000+ keyword variations to target

Low-to-medium competition in long-tail spaces

Commercial intent and active searchers

When It Doesn't Make Sense

If you only have 50-100 keywords to target, traditional content marketing is more effective and manageable. Content requiring deep expertise or unique insights doesn't scale well programmatically. Without structured data available, the approach becomes impractical. In highly competitive spaces requiring significant manual differentiation for each piece, programmatic approaches struggle to compete.

"Programmatic SEO isn't about gaming the system; it's about systematically serving user intent at scale. The key is ensuring every generated page provides genuine value." (Lily Ray, SEO Director, Amsive Digital)

How the AI Search Era Changed Programmatic SEO

The playbook that worked in 2019 leads to failure in 2026. Here's what changed.

The Old Playbook (Pre-2024)

The traditional approach was straightforward. Create thousands of template pages targeting keyword variations. Build backlinks to establish authority. Watch rankings rise in Google's search results. Traffic follows the rankings, and revenue follows the traffic. This worked because Google showed 10 blue links, users clicked through to websites, and traffic converted into business outcomes.

What Changed in 2024-2026

Zero-Click Searches Exploded

60% of searches now end without a click. AI Overviews answer questions directly in search results. ChatGPT and Perplexity provide synthesized answers without requiring users to visit websites. Users get the information they need without clicking through. The impact is significant; ranking number one means less if users never visit your site.

Citations Replaced Rankings as the Prize

Being cited in an AI answer has become more valuable than ranking number one without a citation. Research shows 40.58% of AI citations come from Google's top 10 results, but ranking alone doesn't guarantee your content will be cited. Content quality, structure, and authority determine AI visibility. The question is no longer just "do we rank?" but "do AI systems cite us?"

New Metrics Matter

The old metrics still matter, but aren't the complete picture:

Old metrics: Rankings, traffic, click-through rate, conversions

New metrics: Citations (how often AI references your content), grounding events (when AI uses your content to verify answers), AI visibility across platforms, mention rate in ChatGPT, Claude, and Perplexity

Both traditional and AI metrics matter in 2026, but many teams only track the old ones.

Entity Authority Replaced Keyword Stuffing

AI systems prioritize semantic relationships between concepts over exact keyword matches. They rely on entity recognition: understanding people, places, organizations, and concepts as interconnected entities. Topical authority signals matter more than keyword density. Structured knowledge graphs that show how concepts relate carry more weight than isolated keyword targeting. The emphasis has shifted from "does this page have the keyword?" to "is this entity authoritative for this topic?"

Quality Thresholds Increased Dramatically

The 2019 standard accepted 200-300 words with basic keyword variations. The 2026 standard requires 500+ unique words minimum, 30-40% differentiation between pages, structured formats with proper headings and organization, and original insights beyond simple data population. The bar for what constitutes quality programmatic content has risen substantially.

"We're seeing a clear divide: sites that invested in quality programmatic content are thriving in AI search, while those that took shortcuts are experiencing traffic cliffs." (Kevin Indig, Growth Advisor)

The New Reality

Traditional SEO still matters; you still need to rank in the top 10 to get cited by AI systems. Rankings provide the foundation for AI visibility. However, ranking alone isn't enough anymore. Pages that rank but lack proper structure, sufficient depth, or clear authority signals get ignored by AI engines. You face a double challenge: scale content efficiently through programmatic approaches while maintaining the quality and structure standards that AI systems require for citations.

The 60% Failure Rate: Why Most Programmatic SEO Fails

An estimated 60% of programmatic implementations fail within 18 months, and one in three hits an outright traffic cliff. Understanding why this happens and how to avoid it is critical for success.

The Traffic Cliff Explained

Sites launch thousands of programmatic pages and often see initial ranking success. Rankings improve, traffic grows, and the approach seems to be working perfectly. Then comes the cliff: a sudden, severe traffic drop that can eliminate 60-90% of organic traffic in a matter of weeks. Google deindexes large portions of the site, or AI systems completely ignore the content. Recovery is difficult and often requires rebuilding from scratch.

Why Programmatic SEO Fails

Thin Content at Scale

The most common mistake is creating 10,000 pages where only city names or product names change. Pages contain 200-300 words of templated text with no unique value. Users land on the page, immediately recognize it as template content, and bounce. Google's algorithms detect the pattern and begin deindexing. AI systems find nothing worth citing. A real example: a travel site created 50,000 "hotels in [city]" pages with only city names changing. Google deindexed 98% of these pages within 3 months.

No Meaningful Differentiation

Research shows 93% of penalized sites lacked meaningful differentiation between pages. This manifests as the same structure and text across all pages, with only keywords swapped. Pages show under 30% unique content when compared to the template. There are no page-specific insights, data points, or value adds. Users can't tell one page from another except for the changed keyword.

Ignoring AI Requirements

Many sites optimize exclusively for the 2019-era Google without considering AI search requirements. These pages lack structured data, such as schema markup. They don't answer specific questions that AI systems could extract. There are no comparison tables, FAQ sections, or other structured elements. Entity relationships aren't mapped or made explicit. These pages might rank in traditional search but never get cited by AI systems.

Poor Internal Linking

Programmatic pages often exist in isolation without proper site integration. There's no hub-and-spoke architecture connecting related content. Pages aren't organized into topical clusters. The internal linking is weak or non-existent. Crawlability suffers, and link equity doesn't flow properly through the site. Google struggles to understand the relationship between pages.

No Quality Control

Many teams launch 10,000 pages without any testing or validation. There's no sampling or manual review before launch. Performance isn't monitored after launch. There's no pruning of low-performing pages. There's no optimization or improvement over time. The mentality is "set it and forget it," which inevitably leads to problems.

"The biggest mistake I see is teams treating programmatic SEO as 'set it and forget it.' The successful implementations involve ongoing monitoring, pruning, and optimization." (Aleyda Solis, International SEO Consultant)

Warning Signs

Several indicators suggest your programmatic implementation might be heading for trouble. An indexation rate below 40% means Google isn't indexing most of your pages. Bounce rates above 70% indicate users aren't finding value. Time on page under 30 seconds shows users immediately leave. Zero AI citations despite good rankings means AI systems don't consider your content citable. Manual penalties or warnings from Google are the clearest red flag that something is seriously wrong.
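These warning signs are easy to check automatically. The sketch below is minimal and rests on two assumptions. First, you can export page-set metrics into a dictionary with the hypothetical field names used here. Second, "good rankings" means an average position inside the top 10.

```python
def warning_signs(m):
    """Return the warning signs a programmatic page set triggers.

    Thresholds follow the list above; the metric field names are hypothetical.
    "Good rankings" is assumed to mean an average position in the top 10.
    """
    signs = []
    if m["indexed_pages"] / m["total_pages"] < 0.40:
        signs.append("indexation below 40%")
    if m["bounce_rate"] > 0.70:
        signs.append("bounce rate above 70%")
    if m["avg_time_on_page_s"] < 30:
        signs.append("time on page under 30 seconds")
    if m["ai_citations"] == 0 and m["avg_position"] <= 10:
        signs.append("zero AI citations despite top-10 rankings")
    if m["manual_actions"] > 0:
        signs.append("manual action or warning from Google")
    return signs


snapshot = {  # made-up numbers for illustration
    "total_pages": 1000, "indexed_pages": 350, "bounce_rate": 0.74,
    "avg_time_on_page_s": 48, "ai_citations": 0, "avg_position": 6.2,
    "manual_actions": 0,
}
for sign in warning_signs(snapshot):
    print(sign)
```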

How to Avoid the Cliff

Start small with 100-500 pages to test and validate your approach before scaling. Implement quality gates, including a minimum of 500 unique words per page and 30-40% differentiation from the template. Structured data should be mandatory on every page. Manual review of 5-10% of pages should happen before launch.

Use progressive rollout to minimize risk. Launch 100 pages in week one. Monitor performance closely in weeks two through four. If metrics are positive in week five, begin scaling. Conduct monthly pruning of consistently low-performing pages.
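Writing the week-five decision down as an explicit rule keeps it from being made on gut feel. This is a hypothetical rule of thumb, not a standard. It defines "positive metrics" as week-over-week click growth plus an indexation rate of at least 40%, borrowing that threshold from the warning signs above.

```python
def rollout_decision(week, weekly_clicks, indexation_rate, min_indexation=0.40):
    """Go/no-go for the next rollout step (a hypothetical rule of thumb).

    Weeks 1-4: launch the first 100 pages and only monitor.
    Week 5+: scale if clicks grew week over week and indexation is healthy;
    otherwise fix or prune before adding more pages.
    """
    if week < 5:
        return "monitor"
    growing = len(weekly_clicks) >= 2 and weekly_clicks[-1] > weekly_clicks[-2]
    if growing and indexation_rate >= min_indexation:
        return "scale"
    return "fix or prune"


print(rollout_decision(5, [40, 55, 80, 120], indexation_rate=0.62))
```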

The reality check is simple: if you can't manually defend the value of your programmatic pages to a human reviewer, AI systems won't cite them, and Google may penalize you. Every page should provide genuine, unique value to users.

New Rules for Programmatic SEO in 2026

The fundamentals have changed. Here's the updated playbook for success.

Rule 1: Entity-First, Not Keyword-First

The old approach started with finding 10,000 keyword variations and creating pages targeting each one. The new approach begins with mapping entity relationships, creating semantic clusters that show how concepts connect, and then scaling pages that demonstrate these connections.

Start with core entities: your product, your industry, customer problems, and related concepts. Then build pages that reinforce semantic relationships between these entities. For example, instead of targeting keyword variations like "CRM software," "CRM tool," and "CRM platform," think entity-first. CRM is the core entity that connects to related entities like sales automation, contact management, and pipeline tracking. Create pages that show these relationships explicitly.

Rule 2: Quality Minimums Are Non-Negotiable

Content depth matters more than ever. Each page needs a minimum of 500 unique words; anything under 300 words carries a high penalty risk. The sweet spot for most programmatic pages is 500-1,200 words, depending on topic complexity.

Differentiation between pages must reach 30-40% at a minimum. This means actual unique content, not just swapping city or product names. Pages need meaningful value differences with page-specific insights and data.

Structure is essential. Every page needs clear H2 and H3 headings, comparison tables or lists where appropriate, FAQ sections when relevant, and specific data points with examples. AI systems can't cite content they can't parse and extract.

Rule 3: Dual Optimization (Traditional + AI)

Every programmatic page must satisfy both traditional SEO requirements and AI search requirements simultaneously. For traditional SEO, include target keywords in titles, URLs, and H1 tags. Build internal links to related pages. Optimize meta descriptions. Ensure mobile-friendly design and fast page speed.

For AI search requirements, implement structured data using Schema markup. Provide extractable, clear answers to specific questions. Include comparative information that AI systems can reference. Define entities explicitly. Create content that's genuinely worthy of citation.

Rule 4: Hub-and-Spoke Architecture

Organize content in hub-and-spoke structures. Hub pages serve as comprehensive topic overviews with strong authority signals. Spoke pages cover specific variations and link back to their hub while connecting to related spokes.

For example, a hub page on "Digital Marketing Services" might connect to spoke clusters including "SEO Services in [City]" (500 pages), "[Industry] Marketing Guide" (300 pages), and "[Service] Pricing Calculator" (200 pages). Each spoke links to its hub and to related spokes within its cluster, creating a coherent information architecture.
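A hub-and-spoke structure like this can be generated instead of hand-maintained. The sketch assumes each cluster has its own index page and caps the sibling links on each spoke. All URLs are hypothetical. The wrap-around rotation is one simple way to spread sibling links evenly, not the only one.

```python
def build_links(hub, clusters, max_siblings=2):
    """Map each page to the internal links it should carry.

    `clusters` maps a cluster index URL to its spoke URLs. The hub links to
    every cluster index; each index links to the hub and its spokes; each
    spoke links up to the hub and its index, plus the next `max_siblings`
    spokes in the cluster (wrapping around) so sibling links are spread
    evenly instead of all pointing at the first few pages.
    """
    links = {hub: list(clusters)}
    for index, spokes in clusters.items():
        links[index] = [hub] + list(spokes)
        n = len(spokes)
        for i, spoke in enumerate(spokes):
            k = min(max_siblings, n - 1)
            siblings = [spokes[(i + step) % n] for step in range(1, k + 1)]
            links[spoke] = [hub, index] + siblings
    return links


# Hypothetical URLs modeled on the "SEO Services in [City]" cluster.
clusters = {
    "/seo-services/": ["/seo-services/austin/", "/seo-services/boston/", "/seo-services/denver/"],
}
links = build_links("/digital-marketing-services/", clusters)
print(links["/seo-services/denver/"])
```

With hundreds of spokes per cluster, the index page would need pagination. The sketch links the index to every spoke only to keep the example short.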

Rule 5: Structured Content Wins

AI engines strongly prefer certain content formats. Comparison tables showing side-by-side differences get cited frequently. FAQ format with clear question-answer pairs matches how AI systems respond to queries. Numbered and bulleted lists provide easily extractable information. Clear step-by-step processes work well for how-to content. Definition-first content that explains concepts before diving into details performs better.

Research shows comparison pages account for nearly 30% of all AI citations across major platforms. Structure your content to match what AI systems prefer to cite.

Rule 6: Progressive Scaling

Never launch 10,000 pages on day one. Start with a test cohort of 100-500 pages in phase one. Monitor performance for 4-8 weeks in phase two. In phase three, scale winners while improving or pruning losers. Phase four involves continuous optimization based on performance data.

This approach minimizes risk and maximizes learning. You validate your approach before committing significant resources.

Rule 7: Track New Metrics

Traditional metrics remain important: ranking position, organic traffic, click-through rate, and conversions. But you must also track AI search metrics, including citation rate (how often you're cited), grounding events (when AI uses your content to verify answers), visibility in AI Overviews, and mention rate in ChatGPT and Perplexity.

Both ecosystems matter in 2026. Success requires visibility in traditional search and AI citations working together.

Entity-First Architecture (vs Keyword-First)

The fundamental shift that determines programmatic SEO success.

The Paradigm Shift

The keyword-first playbook followed a simple formula. Find keywords with search volume using standard tools. Create pages targeting exact match keywords. Optimize pages with keyword variations throughout. Build backlinks to improve authority. Hope for good rankings and traffic.

The entity-first playbook works differently. Map your core business entities: your products, services, industry, and related concepts. Identify semantic relationships between these entities. Create interconnected content clusters that demonstrate relationships. Build knowledge graph signals that AI systems can recognize. Earn citations through demonstrated topical authority.

What Are Entities?

Entities are distinct, well-defined concepts that AI systems can recognize and understand:

People: Brand founders, executives, thought leaders

Places: Cities, offices, service locations

Organizations: Your company, partners, competitors

Products: Your offerings and their features

Concepts: Industry terms, methodologies, processes

Why Entities Matter

AI search logic asks "Is this entity authoritative for this topic?" rather than "Does this page have the keyword?" Google's Knowledge Graph understands relationships between entities, not just keyword matching. Citation behavior reflects this: AI systems cite sources with strong entity authority, not pages with high keyword density.

"Entity-based optimization is the future of SEO. AI systems are trying to understand what you are, what you do, and why you should be cited, not just what keywords you rank for." (Bernard Huang, Co-founder of Clearscope)

How to Build Entity-First Programmatic Content

Step 1: Map Your Entity Network

Start by identifying your core entity: your main product or service. Then map related entities, including direct competitors, use cases and applications, industries you serve, integration partners, and methodologies you employ. This creates your entity network.
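An entity network is a graph. This minimal sketch assumes you record relationships as hypothetical (entity, relation, entity) triples. It builds the graph and walks outward from the core entity, which gives a natural priority order for definition pages: the core first, then directly related entities, then the next ring.

```python
from collections import defaultdict, deque


def build_entity_network(triples):
    """Undirected graph: entity -> set of directly related entities."""
    graph = defaultdict(set)
    for subject, _relation, obj in triples:
        graph[subject].add(obj)
        graph[obj].add(subject)
    return graph


def entities_by_distance(graph, core):
    """Breadth-first walk from the core entity; returns {entity: hops from core}."""
    distance = {core: 0}
    queue = deque([core])
    while queue:
        entity = queue.popleft()
        for neighbor in sorted(graph.get(entity, ())):
            if neighbor not in distance:
                distance[neighbor] = distance[entity] + 1
                queue.append(neighbor)
    return distance


# Hypothetical triples based on the CRM example above.
triples = [
    ("CRM", "includes", "contact management"),
    ("CRM", "includes", "pipeline tracking"),
    ("CRM", "related_to", "sales automation"),
    ("sales automation", "related_to", "marketing automation"),
]
network = build_entity_network(triples)
for entity, hops in entities_by_distance(network, "CRM").items():
    print(hops, entity)
```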

Step 2: Create Entity Definition Pages

Each major entity requires a comprehensive page that serves as its authoritative definition. Include a clear, authoritative definition of the entity. Explain relationships to other entities explicitly. Implement structured data using appropriate Schema markup. Add FAQ sections addressing common questions. Include comparisons to related entities where relevant.

Step 3: Build Semantic Clusters

Group related pages that reinforce entity relationships. For example, if "Marketing Automation" is your core entity, create a cluster including "What is Marketing Automation?" (definition), "Marketing Automation vs CRM" (comparison), "Marketing Automation for SaaS" (use case), "Best Marketing Automation Tools" (evaluation), and "How to Implement Marketing Automation" (process). Each page strengthens the entity's topical authority.

Step 4: Implement Structured Data

Use Schema markup to help AI systems understand entities. Implement the Organization schema to establish the company identity. Use the Product schema for offering details. Add Article schema for content type. Include FAQ schema for questions and answers. Use the HowTo schema for process guidance. This structured data helps AI systems parse and understand your content.
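Here is what one of these markup types looks like in practice. The sketch below emits schema.org `FAQPage` JSON-LD (`Question` items with an `acceptedAnswer`) for a page's question-answer pairs. The helper names are hypothetical. Google has restricted FAQ rich results in its own results since 2023, so treat this as machine-readable structure rather than a guaranteed search feature.

```python
import json


def faq_jsonld(pairs):
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def script_tag(data):
    """Wrap JSON-LD in a script tag; escaping "</" keeps answer text from closing the tag early."""
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


data = faq_jsonld([
    ("What is marketing automation?",
     "Software that automates repetitive marketing tasks such as email campaigns."),
])
print(script_tag(data))
```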

Step 5: Cross-Entity Linking

Link between related entities naturally within your content. For instance, write "Marketing automation integrates with CRM systems to track customer journeys and improve conversion rates." Each entity (marketing automation, CRM systems, customer journeys, conversion rates) should link to its definition page, creating a knowledge graph that AI systems can follow.
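Linking entity mentions can be automated once each entity has a definition page. This minimal sketch assumes a hypothetical mapping from entity names to URLs. It links only the first mention of each entity. It also matches all names in a single pass, so the inserted `<a>` tags are never rescanned.

```python
import re


def link_entities(text, definitions):
    """Link the first mention of each entity to its definition page."""
    if not definitions:
        return text
    lookup = {name.lower(): url for name, url in definitions.items()}
    # Longest names first so a longer entity wins over a shorter one it contains.
    names = sorted(definitions, key=len, reverse=True)
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(n) for n in names) + r")\b", re.IGNORECASE
    )
    linked = set()

    def replace(match):
        key = match.group(1).lower()
        if key in linked:
            return match.group(0)  # later mentions stay plain text
        linked.add(key)
        return f'<a href="{lookup[key]}">{match.group(0)}</a>'

    # One pass over the original text, so inserted tags are never re-matched.
    return pattern.sub(replace, text)


definitions = {  # hypothetical definition-page URLs
    "marketing automation": "/marketing-automation/",
    "CRM systems": "/crm/",
    "customer journeys": "/customer-journey/",
    "conversion rates": "/conversion-rate/",
}
sentence = ("Marketing automation integrates with CRM systems to track "
            "customer journeys and improve conversion rates.")
print(link_entities(sentence, definitions))
```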

Quality Thresholds That Satisfy Both Google and AI

Specific requirements for pages that perform in both ecosystems.

The Quality Equation

The minimum viable page in 2026 requires:

500+ unique words (not 200)

30-40% differentiation from template

Structured format with headings, lists, and tables

At least one specific data point or insight

Schema markup implementation

Internal links to 3+ related pages
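These minimums work well as an automated pre-publish gate. The sketch below assumes your build pipeline already computes a per-page summary and simply reports which checks fail. The field names are hypothetical.

```python
def quality_failures(page):
    """Return the minimum-viable-page checks a page fails (empty list = pass).

    `page` is a hypothetical per-page summary produced by your own pipeline.
    """
    checks = {
        "500+ unique words": page["unique_words"] >= 500,
        "30%+ differentiation from template": page["unique_ratio"] >= 0.30,
        "structured format (headings plus a list, table, or FAQ)":
            page["h2_count"] >= 1 and page["has_list_table_or_faq"],
        "at least one specific data point": page["data_points"] >= 1,
        "schema markup": page["has_schema"],
        "3+ internal links": page["internal_links"] >= 3,
    }
    return [name for name, ok in checks.items() if not ok]


page = {
    "unique_words": 640, "unique_ratio": 0.42, "h2_count": 4,
    "has_list_table_or_faq": True, "data_points": 3, "has_schema": True,
    "internal_links": 2,
}
print(quality_failures(page))
```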

Content Depth Requirements

Word Count Targets

Pages with 500-700 words represent the minimum for AI consideration; anything less risks being ignored. The optimal range for most programmatic pages is 800-1,200 words, which provides enough depth for comprehensive coverage. Hub pages and high-value content should reach 1,500+ words to establish authoritative coverage.

Unique Content Percentage

Below 30% unique content creates a high risk of thin content penalties from Google. The acceptable minimum for programmatic scale is 30-40% differentiation from the template. Good differentiation reaches 40-60% unique content. Excellent differentiation exceeds 60%, though this becomes harder to maintain at scale.

Calculate this by comparing the page text to the base template. Unique content means content not present in the template: truly page-specific insights, data, and explanations.
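One way to approximate this percentage is to align the page's words against the template's words and count what doesn't line up. The sketch below uses Python's `difflib.SequenceMatcher` for that alignment. It is a rough word-level proxy, not an official metric, and the sample text is made up. Swapped-in keywords such as the city name count as unique here, so treat the result as an upper bound.

```python
import difflib


def unique_ratio(page_text, template_text):
    """Share of the page's words that do not line up with the base template."""
    page_words = page_text.lower().split()
    template_words = template_text.lower().split()
    if not page_words:
        return 0.0
    # autojunk=False stops difflib from ignoring frequent words on long pages.
    matcher = difflib.SequenceMatcher(None, template_words, page_words, autojunk=False)
    shared = sum(block.size for block in matcher.get_matching_blocks())
    return 1 - shared / len(page_words)


template = "Find trusted plumbers in {city}. Compare prices and reviews before you book."
page = ("Find trusted plumbers in Denver. Compare prices and reviews before you book. "
        "Denver listings show average response times and licensing details for each provider.")
print(f"{unique_ratio(page, template):.0%} unique")
```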

Structural Requirements

Every page must have a unique H1 that clearly indicates the specific topic. Include 3-5 H2 subheadings that organize the content logically. Each page needs at least one comparison table, list, or FAQ section. Provide a clear answer to the primary query users are asking. Include specific data points or examples that add concrete value.

Pages should have H3 subheadings for additional depth and organization. Multiple distinct sections help users and AI systems navigate the content. Visual breaks using bullets and numbered lists improve scannability. A relevant call-to-action gives users a next step.

Specificity vs Generic Content

Generic content fails the AI citation test. For example, "Chicago is a great city with many restaurants to explore" provides no specific, citable value.

Specific content passes the test: "Chicago has 7,300+ restaurants, including 24 Michelin-starred establishments concentrated in neighborhoods like West Loop, River North, and Lincoln Park." This gives AI systems concrete facts they can cite.

The difference is citable specificity versus vague generalities. AI systems need concrete facts they can reference with confidence.

AI Citation Requirements

To get cited by AI systems, pages need several critical elements. First, provide extractable answers: clear, direct responses to questions that AI can quote accurately. Second, include comparative information through "X vs Y" formats or feature comparison tables. Third, implement structured data using Schema markup to signal authority and help AI parse content. Fourth, ensure source worthiness by asking, "Would I cite this page if I were writing manually?" Fifth, maintain factual accuracy, because AI systems cross-reference claims and reduce trust signals for content with errors.

The 5-Page Quality Test

Randomly select 5 programmatic pages from your site and ask these questions about each one. Would I link to this page in my own content? Does it answer a specific question comprehensively? Is there unique value versus competitors covering the same topic? Would AI cite this as authoritative? Could this page stand alone outside the context of the larger set?

If a page earns fewer than four "yes" answers out of five, your quality is too low for 2026 standards.
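If you run this audit regularly, it helps to make the sampling reproducible and the pass rule explicit. This minimal sketch assumes a page passes with at least four "yes" answers. It also assumes the whole sample passes only when every sampled page does; that second rule is an interpretation, not something the test above states.

```python
import random

QUESTIONS = (
    "Would I link to this page in my own content?",
    "Does it answer a specific question comprehensively?",
    "Is there unique value versus competitors covering the same topic?",
    "Would AI cite this as authoritative?",
    "Could this page stand alone outside the larger set?",
)


def sample_pages(urls, k=5, seed=None):
    """Pick k pages at random; pass a seed to make an audit reproducible."""
    return random.Random(seed).sample(urls, k)


def page_passes(answers):
    """answers: one True/False per question; a page needs at least 4 'yes'."""
    if len(answers) != len(QUESTIONS):
        raise ValueError("answer every question")
    return sum(answers) >= 4


def audit_passes(results):
    """results: {url: answers}. The sample passes only if every page passes."""
    return all(page_passes(answers) for answers in results.values())


print(sample_pages([f"/page-{i}/" for i in range(1000)], seed=2026))
```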

The Trade-Off

Higher quality means fewer pages you can create with the same resources. Budget and team constraints limit how much unique content you can produce. But the math has changed; 500 high-quality pages outperform 5,000 thin pages in the 2026 landscape. Quality at a moderate scale beats volume without quality. This represents a fundamental shift from the old playbook.

Content Types That Scale and Get Cited

Not all programmatic formats perform equally. Focus on what works.

Top-Performing Content Types

Comparison Pages ("X vs Y")

Comparison pages dominate AI citations, accounting for nearly 30% of all citations across major platforms. They work because the structure is clear, and AI systems can extract information easily. They answer specific user questions directly. The format is easy to template at scale while maintaining quality.

Location-Based Pages

Location-based pages work well because places are natural entities in AI knowledge graphs. Local data provides built-in differentiation between pages. Search volume across many locations can be substantial. Strong commercial intent often accompanies local searches.

Integration/Compatibility Pages

Integration pages succeed due to technical specificity that adds clear value. They address clear use cases that users actively search for. Commercial intent is typically high. Natural data sources like API documentation and feature matrices support content creation.

Alternative/Competitor Pages

Alternative pages work because they target bottom-funnel decision intent. The comparison format aligns with what AI systems prefer to cite. Users are in the decision stage of their journey. AI systems frequently cite alternatives when users ask for options.

FAQ/Answer Pages

FAQ format pages excel because the question-answer structure is perfect for AI extraction. The format matches how AI systems respond to queries. Content is voice search-friendly. Structured data compatibility through the FAQ schema is straightforward.

Pricing/Calculator Pages

Pricing and calculator pages work because they provide specific, unique data. Commercial intent is very high. Interactive tool functionality adds substantial value. The unique value proposition differentiates you from competitors.

Content Types to Avoid

Pure listicles without depth fail to provide substantive value: "Top 10 [X]" with no analysis or detailed content doesn't meet quality thresholds. Location pages with no local data that just swap city names lack differentiation. Product descriptions alone, without comparison, use case, or evaluation context, don't provide the structure AI systems prefer to cite.

Common Mistakes and How to Avoid Them

Learn from these frequent programmatic SEO failures.

Launching Too Fast

The problem is creating 10,000 pages without first testing quality or performance. The solution is a progressive rollout: start with a 100-500 page test cohort, monitor performance for 4-8 weeks, validate quality and engagement metrics, and scale only once results are proven.

Template-Only Content

The problem is pages that differ only in keyword insertion with no unique value. Fix this by requiring 30-40% unique content per page minimum, adding page-specific data points and insights, including conditional content logic with if/then variations, and manually reviewing 5-10% of pages as a sample before launch.
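Conditional content logic means the template chooses different paragraphs based on each page's data instead of only swapping a keyword. Below is a minimal sketch. The fields (`provider_count`, `avg_price`), the thresholds, and the wording are hypothetical.

```python
def conditional_blocks(city):
    """Choose page-specific paragraphs with simple if/then rules.

    Field names and thresholds are hypothetical; real rules should come from
    whatever data actually differs between your pages.
    """
    name = city["name"]
    blocks = []
    if city["provider_count"] >= 50:
        blocks.append(f"{name} has a large provider pool, so comparing quotes pays off.")
    elif city["provider_count"] > 0:
        blocks.append(f"{name} has only {city['provider_count']} listed providers, so book early.")
    else:
        blocks.append(f"No providers are listed in {name} yet; nearby cities are shown instead.")
    # Only render the price line when the data actually exists for this page.
    if city.get("avg_price") is not None:
        blocks.append(f"Average quoted price in {name}: ${city['avg_price']:,}.")
    return blocks


for paragraph in conditional_blocks({"name": "Austin", "provider_count": 12, "avg_price": 1250}):
    print(paragraph)
```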

Ignoring User Intent

The problem is creating pages because keywords exist, not because users need them. The solution is validating actual search demand before creating pages, checking if existing results already satisfy intent adequately, testing 5-10 pages manually with real users, and implementing feedback loops and user surveys.

No Quality Control

The problem is adopting a "set it and forget it" mentality with no ongoing monitoring. Implement automated quality checks for word count and uniqueness percentage. Conduct regular manual audits at least monthly. Use performance monitoring dashboards. Execute monthly pruning of consistently low performers.
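Monthly pruning is easier to apply consistently with an explicit definition of "consistently low." This sketch assumes a hypothetical per-URL history of monthly clicks plus a count of recent AI citations. It flags pages that stayed under a click floor for three straight months and earned no citations. Whether a flagged page is improved, noindexed, or removed is still a human call.

```python
def prune_candidates(history, months=3, min_clicks=5):
    """URLs under `min_clicks` in each of the last `months` months with no
    recent AI citations. The data shape is hypothetical."""
    candidates = []
    for url, h in history.items():
        recent = h["monthly_clicks"][-months:]
        if len(recent) < months:
            continue  # too new to judge
        if all(c < min_clicks for c in recent) and h["recent_ai_citations"] == 0:
            candidates.append(url)
    return sorted(candidates)


history = {  # made-up data
    "/a/": {"monthly_clicks": [40, 3, 2, 1], "recent_ai_citations": 0},
    "/b/": {"monthly_clicks": [2, 1, 0], "recent_ai_citations": 1},
    "/c/": {"monthly_clicks": [1, 0], "recent_ai_citations": 0},
    "/d/": {"monthly_clicks": [4, 4, 4], "recent_ai_citations": 0},
}
print(prune_candidates(history))
```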

Weak Internal Linking

The problem is that programmatic pages are isolated from the main site architecture. Fix this with a hub-and-spoke linking structure, dynamic contextual linking between related pages, topic cluster organization, and strong site hierarchy integration.

Missing Structured Data

The problem is having no Schema markup or AI-readable formatting. The solution is implementing appropriate Schema types for all pages, using FAQ schema when applicable, creating structured comparison tables, and maintaining a clean HTML hierarchy with proper H1, H2, and H3 usage.

Focusing Only on Traditional SEO

The problem is optimizing for 2019 Google while ignoring AI search requirements. Fix this by tracking AI citations alongside rankings, optimizing for answer extractability, using comparative formats AI prefers, and creating genuinely answer-focused content.

Not Tracking the Right Metrics

The problem is measuring only rankings and traffic while missing AI visibility. Add to your dashboard AI citation rate showing mentions across platforms, grounding events showing content used for verification, visibility in AI Overviews, and mention frequency in ChatGPT and Perplexity. Traditional metrics still matter, but aren't the complete picture anymore.

The Prevention Checklist

Before launching programmatic pages, ensure quality minimums are defined at 500+ words and 30%+ unique content. Create and validate a test cohort with real data. Implement Schema markup across your template. Plan and test your internal linking structure. Set your indexation strategy for what to index versus noindex. Configure tracking for both traditional and AI metrics. Establish a manual review process. Plan your pruning and optimization schedule.

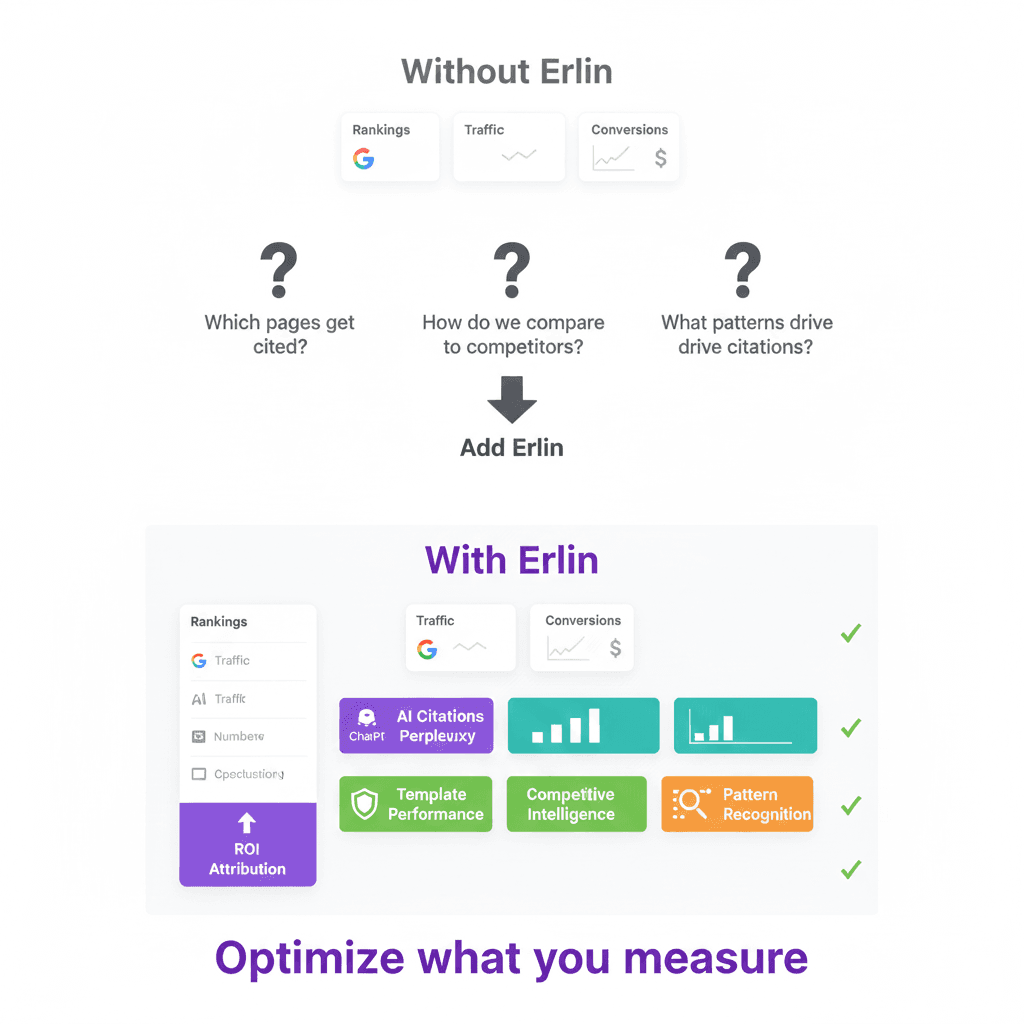

How Erlin Tracks Programmatic Performance Across Both Ecosystems

Most programmatic SEO guides stop at page creation. Here's what they miss.

The Programmatic Measurement Challenge

You've created 1,000+ pages systematically using best practices. You've invested time in templates, data collection, and quality controls. But can you answer these critical questions?

Which programmatic pages actually get cited by AI engines like ChatGPT, Claude, and Perplexity?

How do our pages compare to competitors in AI visibility across different platforms?

What content patterns and structural elements drive the most citations?

Which page templates perform best across both traditional and AI search?

What's our actual ROI from programmatic content in the AI era?

Are we winning traditional rankings but losing AI citations, or vice versa?

Most teams can't answer these questions because they lack the measurement infrastructure to track performance across both ecosystems.

Tracking Performance Across Both Ecosystems

Erlin provides the visibility and intelligence layer for programmatic SEO in the AI search era. It tracks performance across both traditional search rankings and AI citations at scale, giving you the data needed to optimize systematically.

What Erlin Tracks for Programmatic Content

AI Citation Monitoring Per Template

For each programmatic page template, Erlin tracks citation frequency in ChatGPT, Claude, and Perplexity. It monitors grounding events in AI Overviews and tracks mention rate across AI platforms. The system also analyzes citation context to determine positive, neutral, or negative sentiment.

This reveals which templates actually work in AI search. For example, you might discover that "X vs Y" comparison pages generate 47 citations per week, location-based pages generate 12 citations per week, and FAQ format pages generate 31 citations per week. These insights enable data-driven decisions about which templates to scale.

Traditional + AI Performance Correlation

Erlin helps you understand the relationship between ranking and citation. Pages ranking in positions 1-5 typically see 2x the citation rate compared to positions 6-10. Pages with Schema markup are 3x more likely to be cited. Comparative formats show 4x the citation rate versus single-topic pages.

The system identifies specific gaps in your strategy. High rankings with low citations indicate a structure or formatting problem. Low rankings with high citations suggest an opportunity to expand the content and improve rankings. Pages with neither rankings nor citations signal a fundamental quality or relevance issue that needs addressing.
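The three gap patterns above can be expressed as a simple rule. In this sketch, "ranking" means an average position in the top 10 and "cited" means at least one AI citation per week. Both cutoffs and the `classify_gap` name are hypothetical and should be tuned to your niche.

```python
from typing import Optional


def classify_gap(avg_position: Optional[float], weekly_citations: int,
                 top_position: float = 10.0, min_citations: int = 1) -> str:
    """Map a page's ranking and citation signals to one of the gap patterns.

    avg_position is None when the page does not rank at all.
    """
    ranks = avg_position is not None and avg_position <= top_position
    cited = weekly_citations >= min_citations
    if ranks and not cited:
        return "structure/formatting problem"
    if cited and not ranks:
        return "ranking opportunity"
    if not ranks and not cited:
        return "quality/relevance issue"
    return "performing in both"


print(classify_gap(3, 0))     # structure/formatting problem
print(classify_gap(None, 0))  # quality/relevance issue
```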

Template Performance Analysis

Erlin compares different programmatic approaches with concrete data. For example, two templates might compare like this:

| Metric | Template A: "Best [Product] for [Use Case]" | Template B: "[Product A] vs [Product B] for [Use Case]" |
|---|---|---|
| Average ranking | Position 7 | Position 5 |
| AI citation rate | 12% | 34% |
| Time on page | 2:30 | 4:15 |
| Conversion rate | 3.2% | 5.8% |
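To make the comparison concrete, this sketch computes the relative lift of Template B over Template A. It uses the illustrative figures from the example, with time on page converted to seconds. The `TemplateStats` structure is hypothetical, and the lift is the standard relative change (B - A) / A.

```python
from dataclasses import dataclass


@dataclass
class TemplateStats:
    """Aggregate metrics for one programmatic template (hypothetical structure)."""
    name: str
    avg_position: float
    citation_rate: float    # fraction of tracked AI answers citing the template's pages
    time_on_page_s: int
    conversion_rate: float


def relative_lift(a: float, b: float) -> float:
    """Relative change from a to b, e.g. 0.5 means b is 50% higher than a."""
    return (b - a) / a


a = TemplateStats("Best [Product] for [Use Case]", 7, 0.12, 150, 0.032)
b = TemplateStats("[Product A] vs [Product B] for [Use Case]", 5, 0.34, 255, 0.058)

print(f"citation lift: {relative_lift(a.citation_rate, b.citation_rate):.0%}")        # citation lift: 183%
print(f"conversion lift: {relative_lift(a.conversion_rate, b.conversion_rate):.0%}")  # conversion lift: 81%
```

Lift alone says nothing about sample size, so a small template cohort can produce a large but noisy lift.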

This data enables clear decisions. You can scale Template B confidently while optimizing or retiring Template A based on your business goals.

Competitive Intelligence at Scale

For your programmatic content categories, Erlin tracks competitor citation rates and frequency, share of voice in AI responses, gaps and opportunities in coverage, and positioning and sentiment insights.

You might discover insights like "In CRM comparison pages, you're cited 2x more than Competitor A but 50% less than Competitor B in ChatGPT responses." This intelligence informs strategic decisions about where to focus resources.

Content Pattern Recognition

Across all your programmatic pages, Erlin identifies patterns. What content characteristics consistently drive citations? What are the optimal word count ranges for your niche? Which structural formats perform best? Which Schema types correlate with citations? What entity relationship patterns work?

This pattern recognition allows you to learn systematically and scale what actually works rather than guessing.

The Complete Feedback Loop for Programmatic SEO

The system works in five phases:

1. Create systematically: launch programmatic pages with quality thresholds and dual optimization built in.
2. Track with Erlin: monitor both traditional rankings and AI citations at scale.
3. Analyze patterns: understand which templates, formats, and approaches drive visibility in both ecosystems.
4. Optimize with data: update templates based on performance data, retire consistent low performers, scale proven high performers, refine middle performers with specific improvements, and test new variations strategically.
5. Scale intelligently: expand programmatic content based on proven patterns rather than assumptions or guesswork.

What Erlin Provides That Traditional Programmatic SEO Tools Don't

Traditional programmatic SEO tools handle keyword research and opportunity identification, template creation and page generation, page deployment at scale, and traditional ranking tracking. Erlin adds the critical missing pieces: AI citation tracking across platforms, grounding event monitoring, cross-platform AI visibility measurement, template performance comparison across both traditional and AI metrics, competitive AI visibility benchmarking, content pattern insights at scale, and dual-ecosystem optimization intelligence.

When to Use Erlin for Programmatic SEO

Consider Erlin when you currently manage 100+ programmatic pages, need to prove ROI in the AI search era, operate in competitive markets where differentiation matters, want data-driven template optimization, need to track performance in both traditional and AI search, or manage multiple content teams or template variations.

The integration is straightforward. Programmatic SEO creates content at scale efficiently. Erlin measures impact across both ecosystems and informs continuous optimization. Together, they create a systematic, performance-driven content advantage.

FAQ

1. What's the difference between programmatic SEO and regular SEO?

Regular SEO creates individual pages manually with unique content for each topic, typically handling 50-500 pages maximum. Programmatic SEO uses templates and automation to create hundreds or thousands of pages systematically targeting related keyword variations. The key difference is scale; programmatic targets 1,000+ variations efficiently, while regular SEO focuses on deeper manual work on fewer pages.

2. How has AI search changed programmatic SEO requirements?

AI search introduced several critical requirements beyond traditional rankings. Pages now need Schema markup, extractable answers, and comparative formats that AI systems prefer. Quality thresholds increased to a minimum of 500+ unique words with 30-40% differentiation from the template. You must also track new metrics, including AI citations, grounding events, and visibility in ChatGPT, Perplexity, and AI Overviews, alongside traditional rankings and traffic.

3. What's the minimum content length for programmatic pages in 2026?

The minimum is 500 unique words per page; anything under 300 words carries a high penalty risk. The sweet spot is 500-1,200 words, depending on topic complexity, with hub pages reaching 1,500+ words. More important than total word count is the unique content percentage: you need 30-40%+ differentiation from your template to ensure each page provides distinct value.

4. Can I use AI tools to generate programmatic content?

Yes, but with proper oversight. AI tools can generate drafts and create variations efficiently, but you must implement human review of 5-10% of pages, strict quality gates for word count and uniqueness, and fact-checking processes. The best practice is to pair AI generation with human quality control: AI accelerates creation but doesn't replace editorial judgment.
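Drawing the 5-10% human-review sample can be sketched as follows. This assumes you have a list of page URLs, and the `review_sample` helper is hypothetical. A fixed random seed keeps the sample reproducible, so reviewers and auditors see the same pages.

```python
import random


def review_sample(page_urls: list[str], percent: int = 5, seed: int = 0) -> list[str]:
    """Pick about `percent`% of pages (rounded up, at least one) for manual review."""
    if not 1 <= percent <= 100:
        raise ValueError("percent must be between 1 and 100")
    if not page_urls:
        return []
    k = -(-len(page_urls) * percent // 100)  # integer ceiling of n * percent / 100
    return random.Random(seed).sample(page_urls, k)


# Hypothetical URL pattern for 1,000 generated comparison pages.
pages = [f"/compare/product-{i}" for i in range(1000)]
sample = review_sample(pages, percent=5)
print(len(sample))  # 50
```

Integer arithmetic avoids floating-point rounding when computing the sample size.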

5. How do I avoid the "traffic cliff" that affects 60% of programmatic implementations?

Start with a 100-500 page test cohort instead of launching thousands immediately. Maintain quality minimums of 500+ unique words and 30-40% differentiation, and add Schema markup to every page. Use progressive rollout with monitoring between phases, conduct monthly audits, and prune low-performing pages. If you can't defend each page's value to a human reviewer, search engines won't value them either.
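One way to plan the progressive rollout is to start with a test cohort and grow the live page count geometrically. You would advance to the next phase only after monitoring the current one. The doubling factor and the `rollout_phases` helper are illustrative assumptions, and the growth rate is a choice rather than a prescribed value.

```python
def rollout_phases(total_pages: int, cohort: int = 250, growth: int = 2) -> list[int]:
    """Cumulative number of live pages at the end of each rollout phase.

    Starts with a test cohort and multiplies by `growth` each phase,
    capped at the total number of planned pages.
    """
    if total_pages <= 0:
        return []
    if cohort <= 0 or growth < 2:
        raise ValueError("cohort must be positive and growth at least 2")
    phases = []
    live = min(cohort, total_pages)
    while True:
        phases.append(live)
        if live >= total_pages:
            break
        live = min(live * growth, total_pages)
    return phases


print(rollout_phases(2000))  # [250, 500, 1000, 2000]
```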

6. What content formats work best for AI citations?

Comparison pages ("X vs Y") account for 30%+ of AI citations. FAQ format with question-answer pairs, integration guides with step-by-step processes, alternative/competitor pages, and structured lists with specific data all perform well. Avoid pure listicles without depth, thin location pages without local data, and keyword-stuffed content without structure.

7. How long does it take to see results from programmatic SEO?

Indexation takes 2-4 weeks, initial rankings appear in 4-8 weeks, and meaningful traffic growth occurs after 3-6 months. AI citations begin appearing in 2-4 months if quality and structure are high. Meaningful ROI typically requires 6-12 months. Results depend on content quality, competition level, domain authority, and implementation expertise.

8. How does Erlin help specifically with programmatic SEO?

Erlin tracks performance across both traditional rankings and AI citations simultaneously. It compares template performance, identifies content patterns that drive citations, reveals competitive gaps, and provides clear ROI attribution. While most tools help you create pages, Erlin shows which pages actually perform in both ecosystems, enabling data-driven decisions about which templates to scale.

9. What's the ROI timeline for programmatic SEO in 2026?

Investment ranges from $5,000 to $50,000+, depending on scale and resources. Positive ROI typically occurs within 6-12 months with proper quality standards. Higher-quality pages see faster ROI despite requiring more upfront investment. The long-term value is strong: quality programmatic content continues driving results for years with minimal ongoing investment.

10. Should I focus on traditional SEO or AI search optimization?

You need both. Research shows 40.58% of AI citations come from Google's top 10 results, meaning you need traditional rankings to get AI visibility. But ranking alone isn't enough; you also need structured, citable content that AI systems can reference. The winning strategy implements dual optimization from day one, treating these as complementary requirements rather than separate efforts.

The future of programmatic SEO belongs to teams that balance scale with quality, traditional rankings with AI citations, and automation with intelligence. Start building your systematic advantage today.

Ready to track how your programmatic content performs in both traditional and AI search? Explore Erlin to see which pages drive citations and visibility across both ecosystems.


